Optimizing Agents: Why It Matters and How to Do It

Today, agents do more than make static predictions: they chain decisions, retrieve documents, act on APIs, and even reason recursively. How optimized is your agent in terms of its prompt, model configuration, and reasoning structure? In this article, we’ll explore how to optimize agents.

Let’s begin with a simple definition of agents.

AI agents are systems or programs capable of autonomously performing tasks on behalf of a user or another system. At their core, agents combine four key ingredients: a language model, a prompt, memory, and tools. This blend forms a smart system designed to carry out a specific job or class of tasks.
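
To make the four ingredients concrete, here is a minimal sketch of how they might fit together. Everything in it (`SimpleAgent`, `echo_llm`, the `word_count` tool) is a hypothetical name chosen for illustration, and the "model" is a stub function rather than a real LLM call. A real agent would also let the model decide which tool to call; this toy simply runs every tool.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

# Hypothetical type aliases, for illustration only.
LanguageModel = Callable[[str], str]  # prompt text -> completion text
Tool = Callable[[str], str]           # tool input -> tool output


@dataclass
class SimpleAgent:
    """Toy agent that combines a model, a prompt, memory, and tools."""

    llm: LanguageModel
    prompt_template: str
    tools: Dict[str, Tool] = field(default_factory=dict)
    memory: List[str] = field(default_factory=list)

    def run(self, user_input: str) -> str:
        # Give the model recent history and every tool's result as context.
        history = "\n".join(self.memory[-5:])
        tool_notes = "\n".join(
            f"{name}: {tool(user_input)}" for name, tool in self.tools.items()
        )
        prompt = self.prompt_template.format(
            history=history, tools=tool_notes, input=user_input
        )
        answer = self.llm(prompt)
        # Remember the exchange so later calls can refer back to it.
        self.memory.append(f"user: {user_input}")
        self.memory.append(f"agent: {answer}")
        return answer


def echo_llm(prompt: str) -> str:
    """Stand-in for a real model call: it only reports the prompt length."""
    return f"(stub reply to {len(prompt)} prompt characters)"


agent = SimpleAgent(
    llm=echo_llm,
    prompt_template="History:\n{history}\nTools:\n{tools}\nUser: {input}\nAgent:",
    tools={"word_count": lambda text: str(len(text.split()))},
)
print(agent.run("Recommend a movie for a rainy day"))
```

Each field is a separate place where behavior can be tuned, which is why the prompt template is kept as plain data instead of being hard-coded inside `run`.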

If you accept that the language model and the prompt are central to an agent's performance, then it naturally follows that prompt engineering becomes a critical part of building agentic applications.

Whether you're using Chain-of-Thought, ReAct, Prediction, or other strategies, a well-structured prompt tailored to your model can be the differentiator between a mediocre agent and an exceptional one.

That’s where agent optimization comes in.

Why Optimize an Agent?

In a typical LLM-powered agent, there are many optimization touch points:

- Prompt: The instruction clarity, tone, and examples influence LLM behavior.

- Memory: What the agent remembers, forgets, and retrieves.

- Tools: When and how the agent decides to use tools such as search, databases, and APIs.

- Reasoning strategy: Whether it uses Chain-of-Thought, scratchpad reasoning, etc.

Even if you have a great language model like GPT-4o, a poorly constructed prompt or program logic can yield inconsistent results. Optimization helps you align your agent's input → output behavior with your desired goal → outcome.

Imagine a RAG-based agent that initially generates outputs based on limited knowledge. Over time, as it receives feedback and identifies usage patterns, it can optimize its performance using fine-tuned instructions and curated few-shot examples.
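
One way to picture the "curated few-shot examples" part is the sketch below. It assumes a hypothetical interaction log with a 1 to 5 user rating, which the article does not specify. It keeps the best-rated interactions and places them in the prompt as demonstrations.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Interaction:
    question: str
    answer: str
    rating: int  # hypothetical user feedback score, 1 (bad) to 5 (good)


def curate_few_shot(
    log: List[Interaction], k: int = 2, min_rating: int = 4
) -> List[Interaction]:
    """Keep the k best-rated interactions that meet the rating threshold."""
    good = [item for item in log if item.rating >= min_rating]
    # sorted() is stable, so ties keep their original log order.
    return sorted(good, key=lambda item: item.rating, reverse=True)[:k]


def build_prompt(instruction: str, demos: List[Interaction], question: str) -> str:
    """Assemble the instruction, the curated demonstrations, and the new question."""
    parts = [instruction]
    for demo in demos:
        parts.append(f"Q: {demo.question}\nA: {demo.answer}")
    parts.append(f"Q: {question}\nA:")
    return "\n\n".join(parts)


# Made-up log entries, for illustration only.
log = [
    Interaction("What is our refund window?", "30 days from delivery.", 5),
    Interaction("Do you ship abroad?", "I am not sure.", 2),
    Interaction("How do I reset my password?", "Use the 'Forgot password' link.", 4),
]
demos = curate_few_shot(log)
print(build_prompt("Answer using the retrieved policy documents.", demos, "Can I return a gift?"))
```

The low-rated answer never reaches the prompt, so the agent stops imitating its own weak outputs.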

DSPy: Your Optimization Toolkit

DSPy is a lightweight, declarative framework for building AI applications, including agents, and especially for optimizing them.

It allows you to:

- Define task signatures using input/output schemas.

- Implement logic modules like Predict, ChainOfThought, and ReAct.

- Optimize prompts and in-context examples using optimizers like MIPROv2 and BootstrapFewShot, guided by custom metric (reward) functions.

MIPROv2

MIPROv2 (Multiprompt Instruction PRoposal Optimizer Version 2) is an optimizer developed as part of Stanford’s DSP (Demonstrate–Search–Predict) research and implemented in the open-source DSPy library.

Unlike traditional prompt engineering, which relies heavily on trial and error, DSP focuses on systematic prompt optimization. MIPROv2 elevates this by automatically discovering the most effective combinations of instructions and few-shot examples that produce accurate, high-quality outputs tailored to your model’s behavior.

In essence, MIPROv2 helps you move from guesswork to guided refinement, using real task data and performance metrics to iteratively improve your prompts.

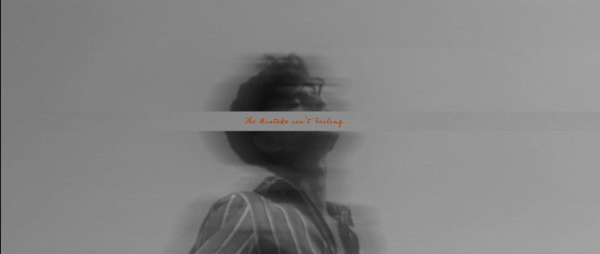

Here is how the optimizer works:

- First, it takes your program, which may be unoptimized at this point, and runs it many times across different inputs to collect traces of input/output behavior for each of your modules. It filters these traces to keep only those that appear in trajectories scored highly by your metric.

- Second, it previews your DSPy program's code, your data, and traces from running your program, and uses them to draft many potential instructions for every prompt in your program.

- Third, it samples mini-batches from your training set, proposes a combination of instructions and traces to use for constructing every prompt in the pipeline, and evaluates the candidate program on the mini-batch.

Using the resulting score, MIPRO updates a surrogate model that helps the proposals get better over time.
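
The three steps above can be sketched as a toy loop. This is not DSPy's implementation. The "model" is a deterministic stub, the candidate instructions are hard-coded instead of being drafted by an LM, and a plain running average per candidate stands in for the surrogate model. The real optimizer also chooses which combination to try next, whereas this sketch visits every combination in turn. All names are hypothetical.

```python
import random
from itertools import product
from statistics import mean

# Toy task: map a mood to a movie genre.
TRAINSET = [("happy", "comedy"), ("sad", "drama"),
            ("anxious", "animation"), ("nostalgic", "adventure")]
BASE_KNOWLEDGE = {"happy": "comedy", "sad": "drama"}  # known with any prompt
FULL_KNOWLEDGE = dict(TRAINSET)                       # unlocked by a good prompt


def stub_program(instruction, demos, mood):
    """Stand-in for an LM call whose quality depends on the prompt."""
    for demo_mood, demo_genre in demos:
        if demo_mood == mood:  # a matching demonstration is copied directly
            return demo_genre
    knowledge = FULL_KNOWLEDGE if "genre" in instruction else BASE_KNOWLEDGE
    return knowledge.get(mood, "unknown")


def metric(expected, predicted):
    return float(expected == predicted)


def bootstrap_traces(instruction):
    """Step 1: run the program and keep only traces the metric scores highly."""
    traces = []
    for mood, genre in TRAINSET:
        output = stub_program(instruction, [], mood)
        if metric(genre, output) == 1.0:
            traces.append((mood, output))
    return traces


def propose_instructions():
    """Step 2: an LM would draft these; here they are hard-coded."""
    return ["Help the user.", "Recommend something.",
            "Return the movie genre that fits the mood."]


def search(instructions, demo_sets, rounds=3, batch_size=3, seed=0):
    """Step 3: score each (instruction, demos) pair on random mini-batches."""
    rng = random.Random(seed)
    candidates = list(product(range(len(instructions)), range(len(demo_sets))))
    history = {candidate: [] for candidate in candidates}
    for _ in range(rounds):
        for i, d in candidates:
            batch = rng.sample(TRAINSET, batch_size)
            score = mean(
                metric(genre, stub_program(instructions[i], demo_sets[d], mood))
                for mood, genre in batch
            )
            history[(i, d)].append(score)
    # Crude stand-in for a surrogate model: average observed score per candidate.
    averages = {c: mean(scores) for c, scores in history.items()}
    best = max(averages, key=averages.get)
    return instructions[best[0]], demo_sets[best[1]], averages[best]


traces = bootstrap_traces("Help the user.")
demo_sets = [[], traces[:1], traces]
best_instruction, best_demos, best_score = search(propose_instructions(), demo_sets)
print(best_instruction, best_demos, best_score)
```

In this toy the instruction dominates the score. In a real pipeline the bootstrapped demonstrations often matter just as much, which is why the search covers both together.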

Example: A Movie Recommender Agent (with pydantic-agent + DSPy)

We’ll walk through a simple agent that recommends a movie based on a user’s mood. The agent will be built using:

- pydantic-agent for input/output schemas

- gpt-4o as the backend model

- DSPy for optimizing the prompt using MIPROv2

Construct the agent

# agent.py
from pydantic import BaseModel

from pydantic_agent.agent import PydanticAgent
from pydantic_agent.llms import OpenAIChat


class MoodInput(BaseModel):
    mood: str


class MovieOutput(BaseModel):
    movie_name: str
    reason: str


llm = OpenAIChat(model="gpt-4o")

DEFAULT_PROMPT = (
    "You are a helpful movie expert. Recommend a movie to someone who feels {mood}. "
    "Return the movie name and a short reason."
)


def create_agent(prompt: str = DEFAULT_PROMPT) -> PydanticAgent[MoodInput, MovieOutput]:
    return PydanticAgent[MoodInput, MovieOutput](
        llm=llm,
        prompt_template=prompt,
    )

Training examples

# examples.py
trainset = [
    {"mood": "happy", "movie_name": "The Secret Life of Walter Mitty", "reason": "It celebrates wonder and optimism."},
    {"mood": "sad", "movie_name": "Inside Out", "reason": "It helps process emotions and find hope."},
    {"mood": "nostalgic", "movie_name": "The Goonies", "reason": "A perfect throwback to childhood adventures."},
    {"mood": "anxious", "movie_name": "Finding Nemo", "reason": "Its calm pacing and hopeful message ease anxiety."},
]

Optimize the agent prompt

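# optimizer.py
import dspy
from dspy.teleprompt import MIPROv2

from examples import trainset


class RecommendMovieSignature(dspy.Signature):
    """Recommend a movie that fits the user's mood."""

    mood: str = dspy.InputField(desc="The user's mood")
    movie_name: str = dspy.OutputField(desc="Recommended movie title")
    reason: str = dspy.OutputField(desc="Short justification")


def title_match(example, pred, trace=None):
    # Placeholder metric: answer_exact_match expects an `answer` field,
    # which this signature lacks, so compare the titles directly.
    return example.movie_name.strip().lower() == pred.movie_name.strip().lower()


def optimize_prompt():
    # Client API of recent DSPy releases; older versions used dspy.OpenAI.
    dspy.configure(lm=dspy.LM("openai/gpt-4o", max_tokens=300))

    # Optimizers expect dspy.Example objects with the input fields marked.
    train_data = [dspy.Example(**e).with_inputs("mood") for e in trainset]

    optimizer = MIPROv2(metric=title_match, auto="light")
    # compile() takes a DSPy program (module), not a bare signature.
    program = dspy.Predict(RecommendMovieSignature)
    optimized_program = optimizer.compile(program, trainset=train_data)

    # The optimized instruction lives on the predictor's signature.
    instructions = optimized_program.signature.instructions
    # create_agent() formats its template with {mood}, so re-attach the placeholder.
    return f"{instructions}\nThe user feels {{mood}}."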

Use optimized agent

# main.py
from agent import create_agent, MoodInput
from optimizer import optimize_prompt

if __name__ == "__main__":
    optimized_prompt = optimize_prompt()
    agent = create_agent(prompt=optimized_prompt)

    input_data = MoodInput(mood="reflective")
    result = agent.run(input_data)

    print(f"Movie: {result.movie_name}")
    print(f"Reason: {result.reason}")

If you evaluate both DEFAULT_PROMPT and the optimized prompt on the same held-out examples, you can measure the difference directly. You should typically see higher accuracy with the optimized prompt, even without any manual prompt engineering.
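
A comparison like this can be set up with a few lines of plain Python. The sketch below is only an outline: the held-out examples are made up, and the two predictors are stubs standing in for the agent built with DEFAULT_PROMPT and the agent built with the optimized prompt. The numbers it prints say nothing about real model performance.

```python
from typing import Callable, Dict, List

Example = Dict[str, str]
Predictor = Callable[[str], str]  # mood -> recommended movie title


def title_match(expected: str, predicted: str) -> bool:
    """Case-insensitive exact match on the movie title."""
    return expected.strip().lower() == predicted.strip().lower()


def accuracy(predict: Predictor, devset: List[Example]) -> float:
    """Fraction of held-out examples where the prediction matches the label."""
    if not devset:
        raise ValueError("devset must not be empty")
    hits = sum(title_match(ex["movie_name"], predict(ex["mood"])) for ex in devset)
    return hits / len(devset)


# Hypothetical held-out data; keep it separate from the training set.
devset = [
    {"mood": "happy", "movie_name": "Paddington 2"},
    {"mood": "sad", "movie_name": "Inside Out"},
    {"mood": "tense", "movie_name": "Gravity"},
    {"mood": "curious", "movie_name": "Arrival"},
]


def baseline_predict(mood: str) -> str:
    # Stub standing in for the agent built with DEFAULT_PROMPT.
    return "Inside Out"


def optimized_predict(mood: str) -> str:
    # Stub standing in for the agent built with the optimized prompt.
    lookup = {"happy": "paddington 2", "sad": "Inside Out", "tense": "Gravity"}
    return lookup.get(mood, "unknown")


print(f"baseline:  {accuracy(baseline_predict, devset):.2f}")
print(f"optimized: {accuracy(optimized_predict, devset):.2f}")
```

To run a real comparison, replace each stub with a function that calls the corresponding agent and returns `result.movie_name`. Exact title match is a strict metric for recommendations, so a softer metric may suit this task better.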

Final Thoughts

As AI agents become more central to real-world systems such as copilots, advisors, and planners, optimization will define their success. By combining libraries like pydantic-agent, LangChain, or DSPy for structure with DSPy for optimization, you create agents that are smarter, cheaper to run, easier to maintain, and better aligned with your business goals.

Don’t stop at building agents — optimize them.

What’s next?

Prompt optimization is just one example. You can also use BootstrapFinetune to fine-tune the LM itself by updating its weights, and more.

References