Forget Prompts—Control AI with Context Engineering

Prompt engineering has been the go-to strategy for interacting with LLMs. But as AI systems mature and enterprises adopt AI solutions, prompts alone are no longer enough: we need to control AI through context. Read on to learn more.

If what you're reading feels familiar—something you're already practicing—then you might already be on the path. Context Engineering may not be entirely new in concept, but it's a term that’s rapidly gaining traction because it formalizes a crucial skill for building efficient and powerful AI systems.

Prompt engineering, while useful, was always a stepping stone—an early attempt to control language models using cleverly crafted inputs. But it’s limited, brittle, and often unreliable at scale. Context Engineering goes further. It’s about systematically managing structured, persistent, and dynamic context to guide large language models (LLMs) toward predictable and high-quality outcomes.

In this article, we will break down the concept and cover:

- How it differs from prompt engineering

- Which elements make up the context

- Why it is becoming one of the hottest skills in AI

What is Context Engineering?

Context engineering is an abstraction layer focused on crafting relevant and comprehensive context so that LLMs can generate accurate and expected results. In a nutshell, it goes beyond just writing a prompt—it's about integrating personalization, knowledge retrieval, and structured guidance to produce meaningful and reliable outputs.

Inspired by Andrej Karpathy’s post and its mention in a LlamaIndex article, here is a summary:

People associate prompts with short task descriptions you'd give an LLM in your day-to-day use. By contrast, in every industrial-strength LLM app, context engineering is the delicate art and science of filling the context window with just the right information for the next step.

The article by LlamaIndex makes a great point—and you might be thinking the same: “Isn’t this just RAG? I’m already doing that.”

Well, think of Context Engineering as a broader abstraction—one that includes retrieval but goes far beyond it. It’s not just about fetching relevant documents; it’s about managing the entire context window, integrating memory, system-level instructions, tool usage, and dynamic state to ensure the model performs consistently and intelligently.
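To make "managing the entire context window" concrete, here is a minimal sketch of budget-aware context packing: each piece of context gets a priority, and the most important pieces are kept until a token budget runs out. The `ContextItem` type, the priority scheme, and the four-characters-per-token estimate are illustrative assumptions, not a standard API; a real system would count tokens with the target model's own tokenizer.

```python
from dataclasses import dataclass


@dataclass
class ContextItem:
    source: str    # hypothetical labels, e.g. "system", "memory", "rag", "tool"
    text: str
    priority: int  # lower number = more important


def estimate_tokens(text: str) -> int:
    # Rough heuristic (assumption): about 4 characters per token.
    return max(1, len(text) // 4)


def pack_context(items: list[ContextItem], budget: int) -> list[ContextItem]:
    """Greedily keep the most important items that fit in the token budget."""
    selected, used = [], 0
    for item in sorted(items, key=lambda i: i.priority):
        cost = estimate_tokens(item.text)
        if used + cost <= budget:  # skip items that would overflow the window
            selected.append(item)
            used += cost
    return selected


items = [
    ContextItem("system", "You are a helpful movie assistant.", priority=0),
    ContextItem("rag", "Long retrieved review text. " * 20, priority=2),
    ContextItem("memory", "User likes Tom Cruise.", priority=1),
]
print([i.source for i in pack_context(items, budget=50)])  # ['system', 'memory']
```

With a 50-token budget, the system prompt and the remembered preference fit, while the long retrieved passage is dropped. Greedy selection by priority is only one possible policy; summarizing or truncating lower-priority items are common alternatives.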

Context Engineering: Producing enterprise-ready solutions

Prompts with added retrieval can add value for quick demos or narrowly scoped solutions. However, they often lack true context—such as the history of previous conversations, the user's persona, or the appropriate way to represent information for a specific use case.

In short, while retrieval helps, it’s the full context window—including memory, personalization, and structural guidance—that enables consistent, relevant outputs and a truly personalized AI experience.

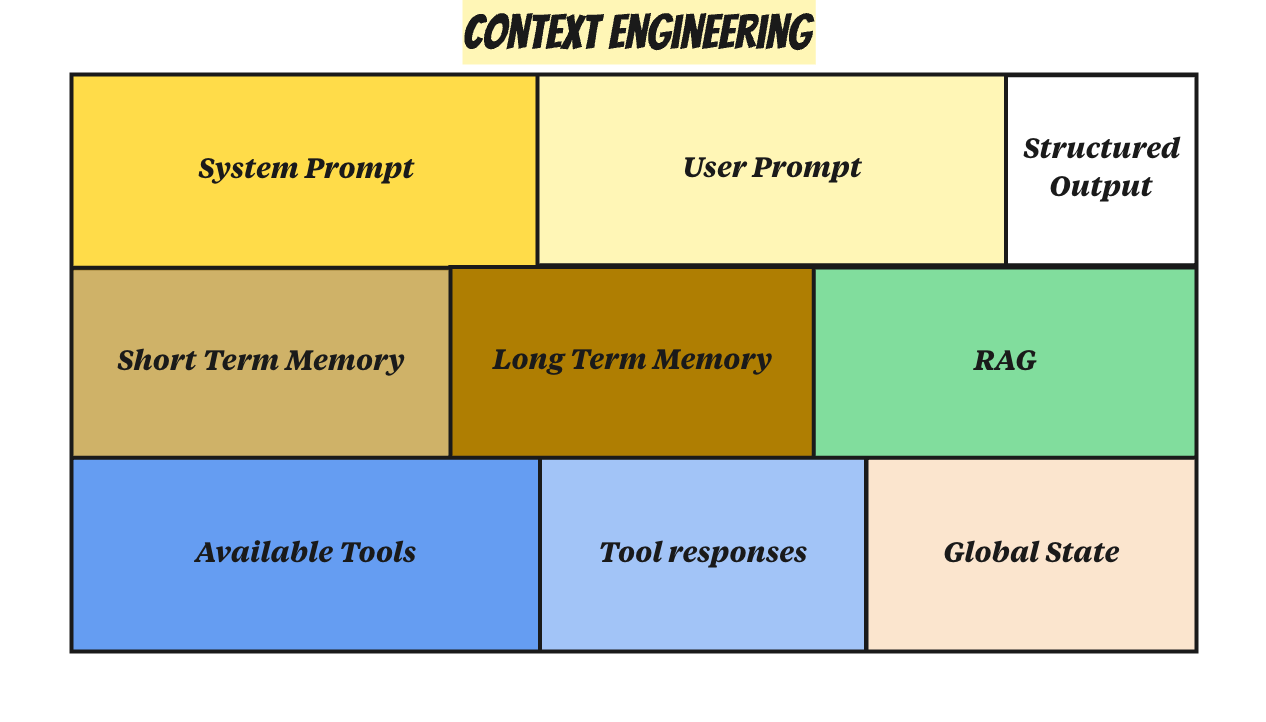

Here is how context breaks down, and everything this abstraction holds:

Each block represents a type of contextual signal that contributes to how an AI system understands and responds.

Here’s a detailed description of each component:

System Prompt

- Definition: The foundational instruction that sets the behavior, tone, and role of the AI.

- Purpose: Establishes default boundaries, rules, and personality (e.g., "You are a helpful assistant trained in finance").

- Why It Matters: Shapes the model’s baseline behavior across interactions — like a startup script for AI.

User Prompt

- Definition: The real-time, user-issued query or instruction.

- Purpose: It’s what the user wants now (e.g., "Summarize this document").

- Why It Matters: Immediate task focus. Without the other layers, it’s fragile and isolated.
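A minimal sketch of how these first two layers are typically combined, assuming the role/content message list that many chat-style LLM APIs accept. `build_messages` is a hypothetical helper, and no model is actually called here.

```python
def build_messages(system_prompt: str, user_prompt: str) -> list[dict]:
    """Layer the persistent system prompt under the user's current request."""
    return [
        # Baseline behavior, tone, and role: the same on every turn.
        {"role": "system", "content": system_prompt},
        # What the user wants right now: changes on every turn.
        {"role": "user", "content": user_prompt},
    ]


messages = build_messages(
    "You are a helpful assistant trained in finance.",
    "Summarize this document.",
)
for m in messages:
    print(f"{m['role']}: {m['content']}")
```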

Short-Term Memory

- Definition: Temporary memory within the current session/conversation.

- Purpose: Tracks recent exchanges, user preferences, in-progress tasks.

- Why It Matters: Enables continuity, avoids redundancy, supports follow-ups (e.g., “what do you mean by that?”).
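One simple way to implement short-term memory is a sliding window over recent turns. The sketch below assumes the same role/content message shape and a hypothetical `ShortTermMemory` class; the window size is an arbitrary choice.

```python
from collections import deque


class ShortTermMemory:
    """Session-scoped buffer holding only the most recent conversation turns."""

    def __init__(self, max_turns: int = 6):
        # A deque with maxlen drops the oldest turn automatically when full.
        self.turns = deque(maxlen=max_turns)

    def add(self, role: str, content: str) -> None:
        self.turns.append({"role": role, "content": content})

    def as_messages(self) -> list[dict]:
        return list(self.turns)


memory = ShortTermMemory(max_turns=2)
memory.add("user", "Recommend an action movie.")
memory.add("assistant", "Try movie A.")
memory.add("user", "What do you mean by that?")
print(memory.as_messages())  # the first request has already been evicted
```

Note the trade-off in the usage lines: with a window of two, the original request is gone by the time the follow-up arrives. Production systems often summarize evicted turns instead of discarding them.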

Long-Term Memory

- Definition: Persisted knowledge across sessions, users, or systems.

- Purpose: Retains facts, preferences, or learning over time.

- Why It Matters: Personalization, continuity, and improved relevance across sessions.
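Long-term memory needs storage that outlives a session. This sketch uses Python's built-in `sqlite3` module with a hypothetical `facts` table keyed by user; the schema and the `LongTermMemory` class are illustrative assumptions. Many systems use a vector store or a dedicated memory service instead.

```python
import sqlite3


class LongTermMemory:
    """Persists user facts across sessions (hypothetical schema)."""

    def __init__(self, path: str = ":memory:"):
        # ":memory:" keeps the demo self-contained; pass a file path to persist on disk.
        self.conn = sqlite3.connect(path)
        self.conn.execute(
            "CREATE TABLE IF NOT EXISTS facts ("
            "user_id TEXT, name TEXT, value TEXT, PRIMARY KEY (user_id, name))"
        )

    def remember(self, user_id: str, name: str, value: str) -> None:
        # INSERT OR REPLACE overwrites an older value for the same fact.
        self.conn.execute(
            "INSERT OR REPLACE INTO facts VALUES (?, ?, ?)", (user_id, name, value)
        )
        self.conn.commit()

    def recall(self, user_id: str) -> dict[str, str]:
        rows = self.conn.execute(
            "SELECT name, value FROM facts WHERE user_id = ? ORDER BY name", (user_id,)
        ).fetchall()
        return dict(rows)


ltm = LongTermMemory()
ltm.remember("u42", "favorite_actor", "Tom Cruise")
ltm.remember("u42", "favorite_genre", "action")
ltm.remember("u42", "favorite_genre", "thriller")  # preference changed later
print(ltm.recall("u42"))  # {'favorite_actor': 'Tom Cruise', 'favorite_genre': 'thriller'}
```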

RAG (Retrieval-Augmented Generation)

- Definition: Dynamically retrieves relevant external knowledge (from databases, vector stores, etc.).

- Purpose: Brings in grounded, contextual information before the AI generates a response.

- Why It Matters: Reduces hallucination, boosts domain knowledge, enables enterprise-specific answers.
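A self-contained sketch of the retrieve-then-generate flow. To avoid external dependencies, it swaps in bag-of-words cosine similarity where a real system would typically use embeddings and a vector store; `retrieve` and `augment_prompt` are hypothetical helpers, and the documents are made up.

```python
import math
import re
from collections import Counter


def bag_of_words(text: str) -> Counter:
    # Lowercased word counts; a real system would embed text with a model instead.
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(count * b[word] for word, count in a.items())
    norm_a = math.sqrt(sum(c * c for c in a.values()))
    norm_b = math.sqrt(sum(c * c for c in b.values()))
    return dot / (norm_a * norm_b) if norm_a and norm_b else 0.0


def retrieve(query: str, documents: list[str], k: int = 2) -> list[str]:
    """Return the k documents most similar to the query."""
    q = bag_of_words(query)
    return sorted(documents, key=lambda d: cosine(q, bag_of_words(d)), reverse=True)[:k]


def augment_prompt(query: str, documents: list[str]) -> str:
    """Ground the model by placing retrieved passages ahead of the question."""
    passages = "\n".join(f"- {doc}" for doc in retrieve(query, documents))
    return f"Answer using only the context below.\n{passages}\n\nQuestion: {query}"


docs = [
    "Refunds are processed within 14 days.",
    "Our office is closed on public holidays.",
    "Shipping is free for orders over 50 dollars.",
]
print(augment_prompt("How many days until refunds are processed?", docs))
```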

Available Tools

- Definition: External functions the AI can call (e.g., search, calculator, database lookup).

- Purpose: Extends the AI’s capabilities beyond language generation.

- Why It Matters: Allows action-taking, real-time data access, and execution beyond the model’s built-in knowledge.

Tool Responses

- Definition: Results from the tools that the AI previously invoked.

- Purpose: Feeds into the next generation step for reasoning or follow-up.

- Why It Matters: Enables multi-step reasoning (e.g., API result → summarize → next action).

Global State

- Definition: Application- or session-level metadata, goals, or status flags.

- Purpose: Encodes broader context — e.g., user’s role, active project, mode (draft vs publish).

- Why It Matters: Enables intelligent behavior across workflows or across agents in multi-agent systems.
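Global state can be as simple as a typed record that the application renders into every prompt. `GlobalState` and its fields are hypothetical; in a multi-agent system, the same object could be shared by every agent.

```python
from dataclasses import dataclass, field


@dataclass
class GlobalState:
    """Hypothetical application-level state shared across steps or agents."""
    user_role: str
    active_project: str
    mode: str = "draft"  # e.g. "draft" or "publish"
    flags: dict = field(default_factory=dict)

    def to_context(self) -> str:
        # Render the state as a compact block the model can read on each turn.
        lines = [
            f"User role: {self.user_role}",
            f"Active project: {self.active_project}",
            f"Mode: {self.mode}",
        ]
        lines += [f"{k}: {v}" for k, v in sorted(self.flags.items())]
        return "\n".join(lines)


state = GlobalState("editor", "Q3 report", mode="publish", flags={"needs_review": True})
print(state.to_context())
```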

Structured Output

- Definition: Enforcing a specific output format (e.g., JSON, tables, markdown).

- Purpose: Ensures outputs are machine- or workflow-compatible.

- Why It Matters: Enables integration, reliability, and automation. Crucial in productized AI systems.
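Enforcing a format usually has two halves: asking for it in the context, and validating what comes back. This sketch shows the validation half with the standard `json` module against a hypothetical movie schema; libraries such as Pydantic or jsonschema offer fuller validation.

```python
import json

# Hypothetical schema: field name -> expected Python type after json.loads.
REQUIRED_FIELDS = {"title": str, "year": int, "rating": float}


def parse_structured_output(raw: str) -> dict:
    """Parse model output as JSON and check that required fields have the right types."""
    data = json.loads(raw)  # raises json.JSONDecodeError (a ValueError) if malformed
    if not isinstance(data, dict):
        raise ValueError("expected a JSON object")
    for name, expected in REQUIRED_FIELDS.items():
        if name not in data:
            raise ValueError(f"missing field: {name}")
        if not isinstance(data[name], expected):
            raise ValueError(f"field {name!r} should be {expected.__name__}")
    return data


try:
    parse_structured_output('{"title": "A", "year": "2010", "rating": 8.1}')
except ValueError as err:
    # In a real pipeline, this message could be fed back to the model for a retry.
    print(err)  # field 'year' should be int
```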

Why is it the hottest skill?

Because it’s the key to achieving production-grade results with AI. It's not just about making LLMs respond—it's about making them respond intelligently, consistently, and personally.

Let’s break it down with a simple hypothetical example. Imagine you're building a conversational agent that provides movie recommendations. The user has been interacting with the system for a while.

Experience without context:

User: Provide me a list of highly rated action movies.

Agent: Here are the top 5 movies for you: A, B, C, D, and E.

Experience with context:

User: Provide me a list of highly rated action movies.

Agent: Since your favorite actor is Tom Cruise and you're interested in thriller concepts, here are the top 5 movies tailored for you: D, C, K, A, F.

I hope this example speaks for itself and shows why context matters and how much value it adds.
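As a toy illustration of what changes between the two responses, the sketch below reranks a hypothetical catalog using a profile that, in a real system, would come from long-term memory. The catalog, the scoring weights, and `recommend` are all made up for illustration; in an LLM app, the profile would more likely be inserted into the prompt than fed to a hand-written ranker.

```python
from typing import Optional

# Hypothetical catalog; titles mirror the placeholder letters in the example.
MOVIES = [
    {"title": "A", "rating": 8.5, "actors": ["Actor X"], "genres": ["action"]},
    {"title": "B", "rating": 8.3, "actors": ["Actor Y"], "genres": ["action"]},
    {"title": "C", "rating": 7.9, "actors": ["Tom Cruise"], "genres": ["action", "thriller"]},
    {"title": "D", "rating": 7.6, "actors": ["Tom Cruise"], "genres": ["action"]},
    {"title": "K", "rating": 7.8, "actors": ["Actor Z"], "genres": ["action", "thriller"]},
]


def recommend(genre: str, profile: Optional[dict] = None, k: int = 3) -> list[str]:
    """Rank movies in a genre, boosting matches with the user's stored profile."""
    def score(movie: dict) -> float:
        s = movie["rating"]
        if profile:
            # Arbitrary illustrative weights for matching remembered preferences.
            if profile.get("favorite_actor") in movie["actors"]:
                s += 1.0
            if profile.get("favorite_genre") in movie["genres"]:
                s += 0.5
        return s

    candidates = [m for m in MOVIES if genre in m["genres"]]
    return [m["title"] for m in sorted(candidates, key=score, reverse=True)[:k]]


profile = {"favorite_actor": "Tom Cruise", "favorite_genre": "thriller"}
print(recommend("action"))           # ['A', 'B', 'C']
print(recommend("action", profile))  # ['C', 'D', 'A']
```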

That said, context engineering comes with its own challenges, such as:

- Context window limits

- Context drift

- Privacy and security

But rather than deterring us, these limitations highlight the importance of mastering this niche skill. The complexity demands intentional design, thoughtful orchestration, and precision in how we build with AI.

Are you ready to lead the next wave of AI development through context engineering?