Add "FLAIR": Applying AI in FAIR Data Principles

The concept is to evolve FAIR into FLAIR by integrating Learning, making AI a native component of data handling and processing.

Lately, I've been thinking out loud (as one does) about the way we handle data and how AI is becoming such a natural part of our day-to-day operations. It feels like every process, every tool, and every decision is now adopting AI. So it got me thinking: why not apply that same evolution to our data principles?

The well-known FAIR data principles: Findable, Accessible, Interoperable, Reusable

We’ve all been working with FAIR principles for a while now and they've served us well. But as we dive deeper into this AI-driven world, it's probably time to give these principles an upgrade. And that’s where I propose "FLAIR".

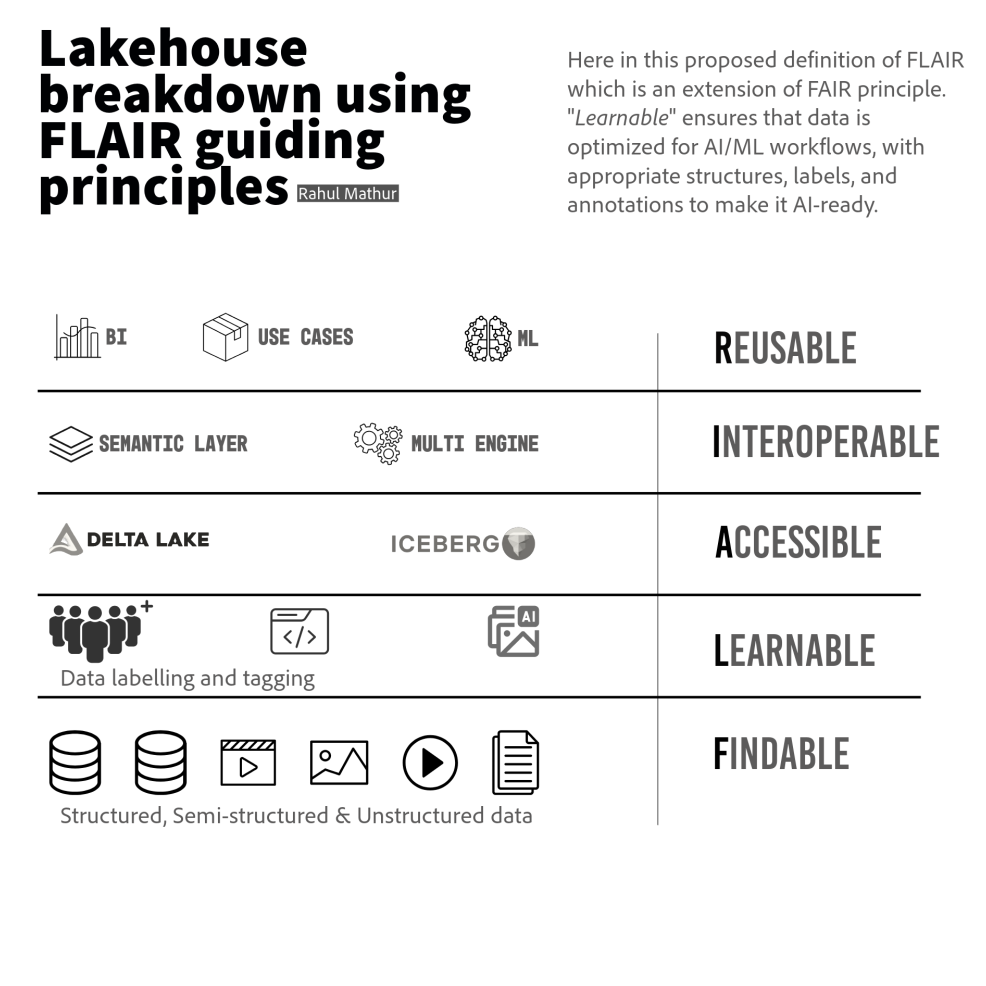

FLAIR - Findable, Learnable, Accessible, Interoperable, Reusable

FLAIR adds AI into the mix, making it a natural part of how we manage data. Here's how it would change things:

- Findable: When a new data request is made, the system should ensure that the required data can be easily located. This could involve searching across metadata catalogs or registries where data assets are indexed and tagged with relevant metadata to allow for easier discovery. AI-enhanced systems can autonomously surface relevant data, offering advanced recommendations beyond just metadata searches.

- Learnable: Data is prepared in a way that is optimized for machine learning and AI applications. This involves labeling and tagging the data so that AI models can effectively interpret and learn from it. Labeling and tagging can be done by in-house staff, through crowdsourcing or outsourcing, or programmatically.

- Accessible: Once the requested data is located, the system should confirm whether the user has the appropriate access permissions under the established protocols. If required, the system should help the user obtain access in a secure and compliant manner, in line with privacy regulations and user roles. Imagine accessing data through simple voice commands or intuitive chatbots. AI agents can make interacting with data feel effortless, even for non-technical folks (with proper authorization and compliance).

- Interoperable: The requested data may need to come from multiple sources or systems, potentially in different formats. The FAIR principles ensure that data can be combined or transferred between systems without significant friction. At this layer, AI agents can handle complex integrations and data connections across systems so that everything works together.

- Reusable: Data should be structured so that others can reuse it in different contexts, such as research replication or new analyses. AI doesn’t just help with reusability; it can also help ensure data is reused responsibly, with privacy, ethics, and compliance checks applied automatically.
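To make the list above a bit more concrete, here is a minimal sketch of what a single catalog entry and a data request might look like if every FLAIR letter had a field of its own. Everything in it is an assumption made for illustration: `DataAsset`, `Catalog`, the field names, and the example roles and tags are hypothetical and do not come from any particular lakehouse or catalog product. The AI parts (recommendations, agents) are left out on purpose; this only shows the plain bookkeeping they would sit on top of.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class DataAsset:
    """One hypothetical catalog entry, with one field per FLAIR letter."""
    name: str
    tags: Set[str]                                          # Findable: metadata used for discovery
    labels: Dict[str, str] = field(default_factory=dict)    # Learnable: annotations for ML use
    allowed_roles: Set[str] = field(default_factory=set)    # Accessible: roles that may read it
    fmt: str = "parquet"                                    # Interoperable: storage format
    license: str = "internal"                               # Reusable: terms of reuse


class Catalog:
    """A toy in-memory metadata catalog (illustrative only)."""

    def __init__(self) -> None:
        self._assets: Dict[str, DataAsset] = {}

    def register(self, asset: DataAsset) -> None:
        self._assets[asset.name] = asset

    def find(self, *tags: str) -> List[DataAsset]:
        """Findable: return assets carrying every requested tag, sorted by name."""
        wanted = set(tags)
        matches = [a for a in self._assets.values() if wanted <= a.tags]
        return sorted(matches, key=lambda a: a.name)

    def can_access(self, name: str, role: str) -> bool:
        """Accessible: check the requesting role against the asset's allowed roles."""
        return role in self._assets[name].allowed_roles

    def is_learnable(self, name: str) -> bool:
        """Learnable: treat an asset as ML-ready only if it has at least one label."""
        return bool(self._assets[name].labels)


# Example request: locate the data, then check access before handing it over.
catalog = Catalog()
catalog.register(DataAsset(
    name="sales_2024",
    tags={"sales", "emea"},
    labels={"churned": "bool"},
    allowed_roles={"analyst"},
))
catalog.register(DataAsset(name="raw_logs", tags={"logs"}))

for asset in catalog.find("sales"):
    granted = catalog.can_access(asset.name, role="analyst")
    print(asset.name, "access granted:", granted,
          "ML-ready:", catalog.is_learnable(asset.name))
```

In a real setup, the tag match in `find` is where an AI-driven recommender would plug in, and `can_access` would defer to whatever authorization system is already in place.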

Consider a data lakehouse that adopts the FLAIR principles. The following is a high-level breakdown:

Credits: The design above is influenced by, and extends, a Databricks article on FAIR.

This idea of FLAIR is just me thinking out loud, but I wanted to put it out there because it feels like the natural next step. As we go AI-native in everything else, it’s time to enhance FAIR with FLAIR and let AI help us handle data smarter and faster.